Meta, the parent company of Facebook and Instagram, is testing facial recognition technology to tackle the growing problem of fake celebrity ads, often referred to as “celeb-bait” scams. In these scams, cybercriminals impersonate well-known figures to trick users into handing over personal information or money. With a user base nearing 4 billion, Meta is taking a significant step to protect both its platform users and celebrities from these deceptive practices.

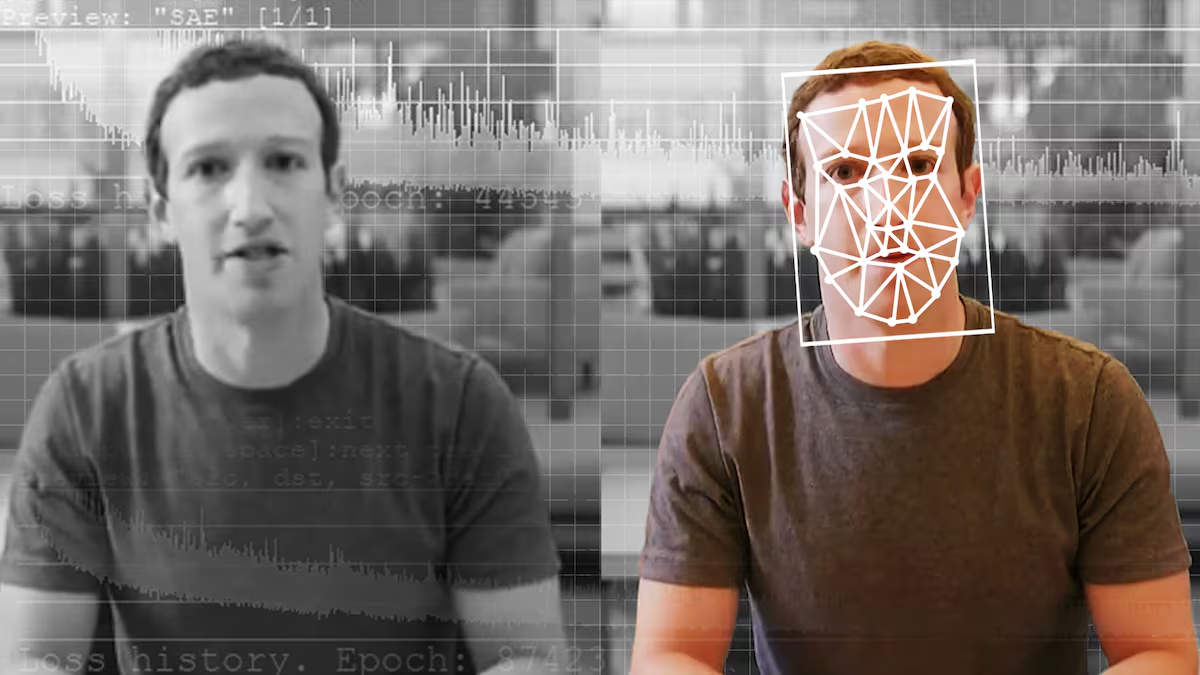

How Meta’s Facial Recognition Technology Works:

Meta’s new system compares images in advertisements with the official profile pictures of celebrities on Facebook and Instagram. If the system detects a match and determines that the ad is a scam, it will immediately block the ad. This initiative, currently in its early stages, has already produced promising results in a pilot test involving a small group of celebrities.
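Meta has not published how the matching is implemented, so the following is only a minimal sketch of the idea described above. It assumes that each face has already been converted into a numeric embedding, that faces are compared with cosine similarity, and that a separate scam check has already flagged the ad. The names `Celebrity`, `find_match`, and `review_ad` and the 0.9 threshold are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from math import sqrt
from typing import Optional, Sequence


@dataclass(frozen=True)
class Celebrity:
    """Hypothetical record: a public figure and a face embedding
    derived from their official profile picture."""
    name: str
    profile_embedding: Sequence[float]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_match(
    ad_embedding: Sequence[float],
    celebrities: Sequence[Celebrity],
    threshold: float = 0.9,  # assumed cut-off, not a published value
) -> Optional[Celebrity]:
    """Return the best-matching celebrity at or above the threshold, else None."""
    best, best_score = None, threshold
    for celebrity in celebrities:
        score = cosine_similarity(ad_embedding, celebrity.profile_embedding)
        if score >= best_score:
            best, best_score = celebrity, score
    return best


def review_ad(
    ad_embedding: Sequence[float],
    flagged_as_scam: bool,
    celebrities: Sequence[Celebrity],
    threshold: float = 0.9,
) -> str:
    """Block only when the face matches a protected figure AND the ad is a scam."""
    match = find_match(ad_embedding, celebrities, threshold)
    if match is not None and flagged_as_scam:
        return "block"
    return "allow"
```

In this sketch, calling `review_ad` with an embedding close to a listed celebrity's and `flagged_as_scam=True` returns `"block"`, while the same face in an ad that was not flagged returns `"allow"`. That mirrors the two conditions described above: a face match on its own is not enough to block an ad.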

In the coming weeks, Meta plans to expand the testing to include 50,000 public figures and celebrities. This move is designed to prevent the spread of misleading ads that exploit the identities of high-profile individuals, including Elon Musk, Oprah Winfrey, and Australian billionaires Andrew Forrest and Gina Rinehart, who have all been impersonated in fraudulent ads.

Addressing “Celeb-Bait” Scams:

The rise of deepfake technology has made it easier for scammers to create convincing ads featuring well-known figures, tricking unsuspecting users. Meta refers to these types of scams as “celeb-bait,” and they violate the company’s policies. These scams have become more sophisticated over time, leading to significant financial losses and damage to the reputation of both the platform and the impersonated individuals.

Meta’s new facial recognition tool is part of its broader strategy to fight cybercrime and improve platform security. The company has been under pressure to address these issues, as the damage caused by celeb-bait scams continues to grow.

Privacy Concerns and Legal Challenges:

While the new technology has shown early promise, Meta must navigate concerns related to privacy and biometric data. The company recently agreed to a $1.4 billion settlement with Texas over claims that it used residents’ biometric data without proper legal authorization. Against that backdrop, Meta has stated that it will delete any facial recognition data generated while checking whether an ad is a scam. This approach aims to balance the need for security with user privacy.

Additionally, Meta will soon begin notifying celebrities who are targeted by scam ads. These notifications, sent via in-app messages, will inform them that they have been enrolled in the facial recognition protection measure. Celebrities will be able to opt out of this feature if they choose.

Verifying Identity and Regaining Access:

Apart from combating fake celebrity ads, Meta is also exploring the use of facial recognition to help users verify their identities and regain access to compromised accounts. This feature could prove essential in protecting users from identity theft and account hijacking, both of which have been growing concerns on social media platforms.

Conclusion:

Meta’s facial recognition technology represents a proactive step toward curbing deepfake celebrity ads and improving the safety of its platform. While there are still challenges to address, particularly concerning privacy and legal issues, this technology could serve as a valuable tool in the fight against cybercrime. As deepfakes and celeb-bait scams continue to evolve, Meta’s efforts to implement innovative solutions are crucial in maintaining trust among its users and protecting the identities of public figures.

Pooja is an enthusiastic writer who loves to dive into topics related to culture, wellness, and lifestyle. With a creative spirit and a knack for storytelling, she brings fresh insights and thoughtful perspectives to her writing. Pooja is always eager to explore new ideas and share them with her readers.